I really wanted my next post to be about the blog’s automatic pipeline, but thanks to The Amazing Digital Circus, a very popular show with nearly 100 million people watching worldwide, I had to pivot.

In the show’s latest release (Ep 7: Beach Episode), the animated host, Caine, explicitly mentions the thought experiment. When asked a question, he dramatically replies, “Great question Gangle, for an answer let me consult the Chinese Room.” The joke continues when another character asks what the Chinese Room even is, and Caine claims, “I believe it’s a fluent Chinese speaker who will give me advice.”

This brief mention, and the later plot point where characters try to break into the room to find a way out of the simulation, perfectly sets up the debate: Is Caine truly intelligent, or is he just following a grand set of instructions?

It’s a thought experiment I have already contemplated and am really fond of, so I wanted to talk about it today, as this profound idea has just been streamed to tens of millions.

The Thought Experiment

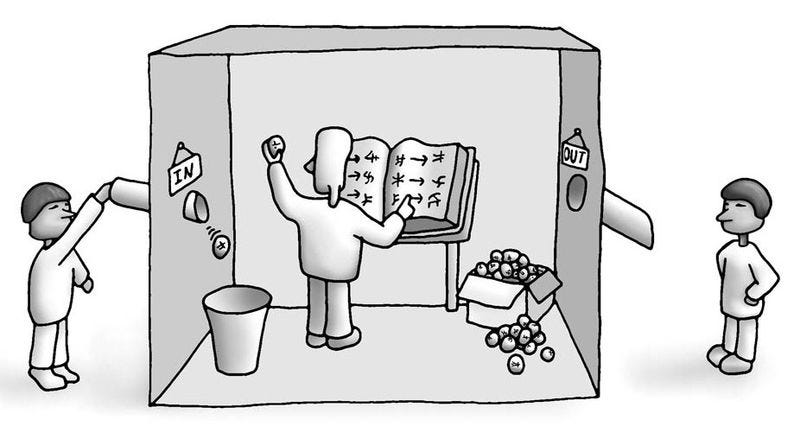

A person who has no knowledge of Chinese is locked inside a room with a bunch of books with Chinese answers to Chinese questions (similar to an input-output phrasebook). The person inside the room receives slips of paper on which questions have been written in Chinese. The person then appropriately answers the questions using the books found in the room.

For example, the trapped person could be asked on a slip of paper, “What color is your shirt?” He will then look the question up in the phrasebooks and respond on another slip of paper, “Blue.”

Since all of the questions and answers are written in Chinese, all the person did was accurately copy symbols from the phrasebooks onto the slips of paper, with no idea what any of them meant. In fact, he is wearing a red shirt.
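To make the setup concrete, here is a minimal sketch of the room as a plain lookup table. The phrasebook entries, the `chinese_room` function, and the `ACTUAL_SHIRT_COLOR` variable are all made up for illustration; the point is only that the answer comes from matching symbols, never from checking the shirt.

```python
# Hypothetical phrasebook: incoming symbols mapped to outgoing symbols.
PHRASEBOOK = {
    "你的衬衫是什么颜色？": "蓝色",  # "What color is your shirt?" -> "Blue"
    "你好吗？": "我很好",            # "How are you?" -> "I am fine"
}

# A fact about the world that the lookup below never consults.
ACTUAL_SHIRT_COLOR = "red"


def chinese_room(slip: str) -> str:
    """Copy out the answer listed for the incoming slip, without interpreting it."""
    # Unknown slips get a lone question mark back (an arbitrary choice for this sketch).
    return PHRASEBOOK.get(slip, "？")


print(chinese_room("你的衬衫是什么颜色？"))
```

The room answers “Blue” every time, whatever `ACTUAL_SHIRT_COLOR` says, because nothing in the function connects the symbols to the thing they describe.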

Connection to AI

This thought experiment was originally presented to challenge the philosophical position of Strong AI: the idea that a computer programmed to simulate the human mind can actually be considered to have a mind and “think” in the same way a human does.

In other words, according to this thought experiment, simulating a mind is not the same as having one. At the end of the day, the AI just receives inputs and produces outputs.

The thought experiment applies just as well to a predetermined approach like the “book of Chinese answers to Chinese questions” as it does to more recent trends, where instead of an answer book you have a chart that lets you predict the next word based on probability.
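As a rough illustration of that “chart”, here is a toy sketch that counts which word follows which in a tiny made-up corpus and then picks the most frequent follower. Real language models are vastly more sophisticated than this; the `build_chart` and `predict_next` names and the corpus are my own assumptions, not how any particular system works.

```python
from collections import Counter, defaultdict


def build_chart(text: str) -> dict:
    """Count, for every word, how often each other word comes right after it."""
    words = text.split()
    chart = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        chart[current][following] += 1
    return chart


def predict_next(chart: dict, word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = chart.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Made-up training text for this sketch.
corpus = "the room has books the room has slips the man has books"
chart = build_chart(corpus)

print(predict_next(chart, "the"))
print(predict_next(chart, "has"))
```

The prediction is driven purely by counts: “room” follows “the” more often than “man” does, so “room” wins. Nothing in the chart records what a room is.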

The human mind has semantics (meaning and comprehension) for words, which lets us genuinely understand what each word means.

Programs, on the other hand, are entirely syntactic. They only manipulate words according to the predetermined rules they follow. No matter how much data they have, they work on statistical pattern recognition and prediction, and according to the argument, this workflow is not enough to constitute genuine understanding or a mind.

The Systems Reply

The primary objection to the Chinese Room is the Systems Reply. This counter-argument posits that the Chinese Room mistakenly focuses only on the part performing the calculation, not the entity that truly possesses the intelligence.

We can understand this using an analogy to computer architecture: the trapped man is the CPU/GPU, and the phrasebook is the program.

While the man (the processor) doesn’t understand Chinese, the entire system does: the man executing the program written in the books, processing the inputs, and generating the outputs. The consciousness, or “mind,” resides in the operation of the whole system, not just in the component that performs the mechanical task.
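The analogy can be sketched in a few lines. Below, `processor` is a generic symbol matcher with no knowledge of any language, the rulebooks are plain data, and a `Room` is the two combined. All of these names and entries are hypothetical, and the sketch does not settle whether the system understands anything; it only shows where the Systems Reply locates the behavior.

```python
from typing import Dict

Rulebook = Dict[str, str]


def processor(rulebook: Rulebook, slip: str) -> str:
    """The 'man': matches symbols against whatever rulebook he is handed."""
    return rulebook.get(slip, "?")


# The 'program': made-up rulebooks for two different languages.
chinese_rules: Rulebook = {"你好": "你好！"}
french_rules: Rulebook = {"Bonjour": "Bonjour !"}


class Room:
    """The 'system': a processor together with one specific rulebook."""

    def __init__(self, rulebook: Rulebook) -> None:
        self.rulebook = rulebook

    def answer(self, slip: str) -> str:
        return processor(self.rulebook, slip)


chinese_room = Room(chinese_rules)
french_room = Room(french_rules)

print(chinese_room.answer("你好"))
print(french_room.answer("你好"))
```

The same `processor` runs in both rooms, yet only one of them responds to Chinese. Whatever ability there is to answer in Chinese belongs to the combination of processor and rulebook, which is the level the Systems Reply asks us to look at.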

It is important to remember that this debate is not settled. The Chinese Room remains a famous thought experiment with no definitive, universally accepted answer.

Difference from humans

Some of you might look at the thought experiment and think: “In the end, our brains work just like the machine. We too work in an input-output pattern, we just haven’t reverse-engineered our brains yet.”

This is a vast topic that I might cover in a separate post or two, but because I don’t like cliffhangers, let’s keep it brief and talk about a few points:

Machines don’t have qualia. Qualia are, by definition, the internal and subjective component of sense perception, arising from stimulation of the senses by phenomena.

AI has only derivative intentionality. Its words have meaning only because of the human intelligence that created the training data and assigned meaning to the symbols.

Let’s say I ask an AI to describe a mountain. It doesn’t care or intend for its words to refer to the real thing; it just follows a high-probability path to generate text about a mountain.

Hopefully this time, the next post will be about the blog pipeline 🙏